Deploying Magento2 – Releasing to Production [3/4]

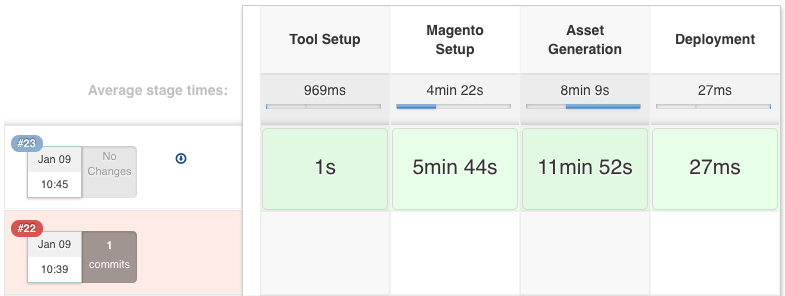

This post is part of a series:

1. History and Overview of Magento2 Deployment
2. Jenkins Build-Pipeline Setup (building assets, controlling the deployment)
3. Releasing to Production (delivering code and assets, managing releases)
4. Future Prospect (cloud deployment, artifacts)

Recap

In the last post, Jenkins Build-Pipeline Setup, we had a look at our Jenkins Build-Pipeline and how its setup and configuration are done. If you haven’t read it yet, you should probably do so before reading this post. The last step in our Build-Pipeline was the actual Deployment…